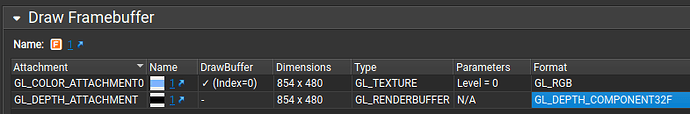

I’m currently trying to expand the OpenGL example code into something a bit more like a real game, learning OpenGL and Odin along the way. I thought I’d switch to a floating-point z-buffer, as that just seems way easier to work with than integer ones, but I have gotten lost in all the incantations that don’t work. So far I get a 16-bit integer z-buffer when rendering to the back buffer, and a 24-bit integer z-buffer attached to a framebuffer object, and it seems to stay that way no matter what flags I pass around.

I have tried setting the global attributes GL_FLOATBUFFERS and GL_DEPTH_SIZE, since they seemed relevant, but they don’t seem to change from their defaults no matter when I set them.

I’m on Windows 10, which might matter according to 15-year-old Stack Overflow answers.

Code is here: Some buggy test code · GitHub

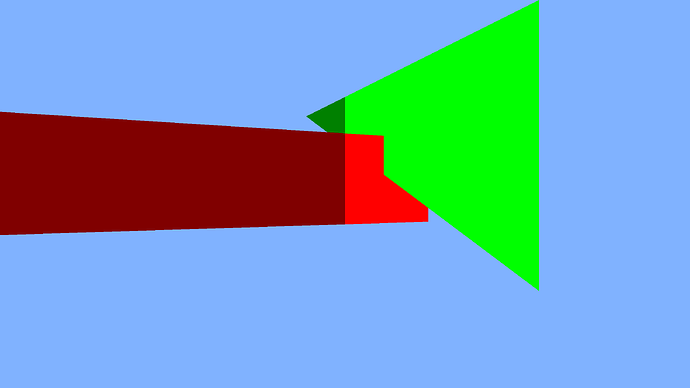

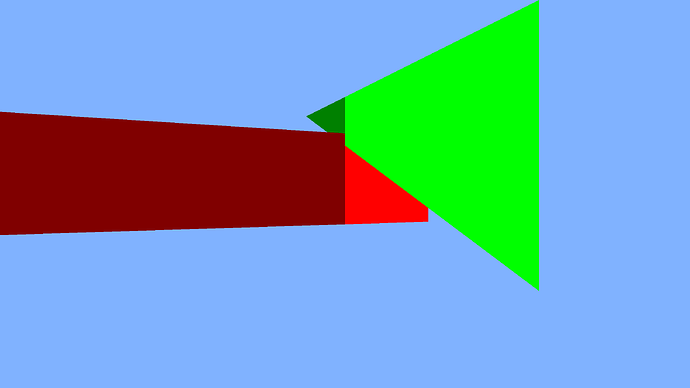

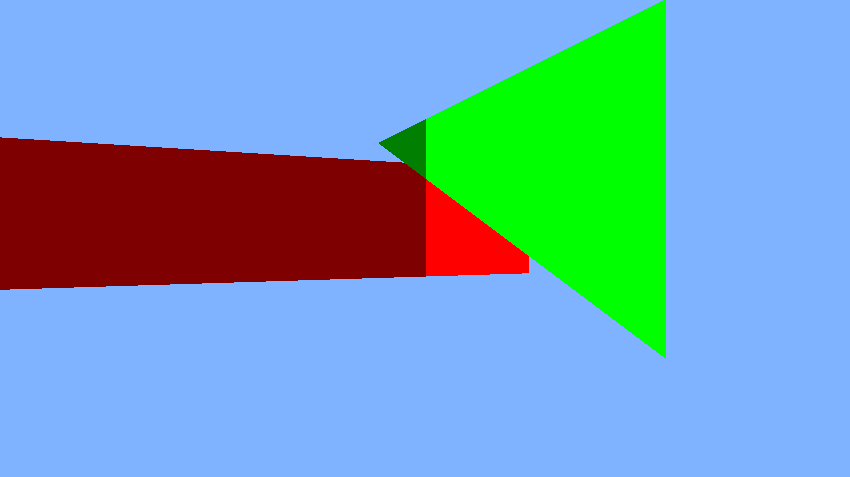

I have set it up to produce a clearly visible z error that a floating-point z-buffer should fix: the two triangles ought to intersect at the point where they change colour.

From what I can see, there isn’t a way to get a floating-point depth buffer on the screen backbuffer. GL_DEPTH_SIZE may get you a 32-bit normalized depth buffer, but I don’t think you can get a 32-bit float depth buffer for the backbuffer. GL_FLOATBUFFERS seems to be for something else. Creating your own depth buffer explicitly and rendering into that definitely seems to be the way.

https://github.com/libsdl-org/SDL/issues/3633#issuecomment-2256643982

https://github.com/libsdl-org/SDL/pull/5999

That said, I don’t see any indication that the depth buffer you’re creating isn’t floating-point: NVIDIA Nsight Graphics does show it as GL_DEPTH_COMPONENT32F for me.

Are you sure that there’s actually enough precision? You’re setting the perspective far distance quite far, and perspective projections map depth very non-linearly, so most of the available depth values end up spent on a thin slice of distances just beyond the near plane. Even 32-bit floats have limits. Techniques like reverse and logarithmic depth exist for a reason.
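To make the non-linearity concrete, here is a small Python sketch of the window depth produced by a conventional finite-far OpenGL projection. It assumes the classic glFrustum-style matrix and the default glDepthRange(0, 1), and the near/far values are made up for illustration rather than taken from the linked code.

```python
# Sketch: window-space depth from a conventional finite-far OpenGL perspective
# projection (glFrustum-style matrix) followed by the default glDepthRange(0, 1).
# The near/far values below are illustrative, not taken from the linked code.


def window_depth(d: float, near: float, far: float) -> float:
    """Depth in [0, 1] for a point at positive view distance d."""
    # z_ndc = (f + n)/(f - n) - 2fn/((f - n) d), then z_w = (z_ndc + 1)/2,
    # which simplifies to f (d - n) / ((f - n) d).
    return far * (d - near) / ((far - near) * d)


def half_range_distance(near: float, far: float) -> float:
    """View distance at which half of the [0, 1] depth range is already used."""
    # Solving window_depth(d) = 0.5 for d gives d = 2fn / (f + n).
    return 2.0 * far * near / (far + near)


near, far = 0.1, 1000.0
# Roughly 0.9: distances 0.1..1.0 consume about 90% of the depth range.
print(window_depth(1.0, near, far))
# Roughly 0.2, i.e. about twice the near distance.
print(half_range_distance(near, far))
```

With these numbers, everything from 1 unit out to 1000 units has to share the remaining tenth of the depth range.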

The whole point of a floating-point depth buffer is to allow drawing at a wide range of distances simultaneously. The output I get indicates that it is not working as intended: using DEPTH_COMPONENT32F gives exactly the same visual output as the three other formats I have left commented out.

In any case, I’d like to know what actually happens on your computer. The thumbnail looks like nothing is being rendered; isn’t there some red and some green on your screen?

For reference, I get this screen:

Without precision loss I would expect to get the result shown in my next reply.

I also get the console outputting:

fb 0

ds 16

ma 4

mi 6

This indicates that neither GL_FLOATBUFFERS nor GL_DEPTH_SIZE took the value I tried to set.

I do get triangles, yes; they just don’t intersect at all, rather than intersecting in the wrong place.

fb 0

ds 24

ma 4

mi 6

GL_DEPTH_SIZE/ds is for the backbuffer’s depth buffer, and shouldn’t impact the depth buffer that you explicitly create.

Where is the expected-result image from? I’m guessing it’s from reducing the far plane distance, since I get the same result if I reduce it.

At the extremely long far plane distance you’re trying to use, you may also be running into rounding issues with the vertex positions themselves.
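As a rough illustration of that rounding: vertex positions are normally stored and transformed as 32-bit floats, whose absolute spacing grows with magnitude. The Python sketch below uses struct to emulate float32 rounding on the CPU; it only approximates what the GPU does, and the coordinate values are arbitrary examples.

```python
import struct


def f32(x: float) -> float:
    """Round a Python float (a double) to the nearest IEEE 754 single."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def f32_spacing(x: float) -> float:
    """Gap between a positive float32 value x and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits + 1))[0] - f32(x)


for coord in (1.0, 1e4, 1e6, 1e8):
    print(f"{coord:g}: next float32 is {f32_spacing(coord):g} away")

# At 1e8 the spacing is 8, so a small offset simply disappears.
print(f32(1e8 + 3.0) == 1e8)  # True
```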

Maybe you get the error in the opposite direction from me, and the intersection point is simply too far left to be visible. That would suggest that either the triangles are rendered in the opposite order, or the depth compare for some reason does draw on equal despite the flag I set telling it not to.

It is actually the near plane; there is no far plane in this setup. The way the matrix multiplication works, only the z component is influenced by the near plane value, and it is just a straight scaling factor, so it shouldn’t affect the accuracy of a floating-point number unless you actually run out of exponent.
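The scaling argument can be checked numerically. The sketch below assumes the projection yields z_clip = near and w_clip = distance, so that z_ndc = near / distance; that is one reading of the description above (the linked code is not reproduced here), and the near value of 0.1 is hypothetical. It prints the relative float32 resolution of z_ndc at several distances.

```python
import struct

NEAR = 0.1  # hypothetical near-plane value, not taken from the linked code


def f32(x: float) -> float:
    """Round a Python float (a double) to the nearest IEEE 754 single."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def f32_spacing(x: float) -> float:
    """Gap between a positive float32 value x and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits + 1))[0] - f32(x)


def relative_step(d: float, near: float = NEAR) -> float:
    """Relative float32 resolution of z_ndc = near / d (assumed mapping)."""
    z = f32(near / d)
    return f32_spacing(z) / z


for d in (1.0, 1e3, 1e6, 1e9):
    # Stays between 2**-24 and 2**-23 (about 6e-8 to 1.2e-7) at every distance.
    print(f"d={d:g}: relative step {relative_step(d):.2e}")
```

As long as the value stays within the normal float32 exponent range, the relative resolution does not depend on the distance.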

Does it make any difference if you pick another depth format on lines 109-112?

Okay, I found out why from the Outerra article “Maximizing Depth Buffer Range and Precision”.

Turns out OpenGL is just broken, sabotaged by either extreme incompetence or malice. After the shader returns a z value in the range -1 to 1, OpenGL remaps this value to 0 to 1, and this transformation destroys the extra precision that floating point has near zero. NVIDIA introduced the glDepthRangedNV function, whose sole useful feature is that you can call it with the values -1 and 1 to disable the remap; it was then standardised in a version that clamps both input values to between 0 and 1.
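The remap is the viewport depth transform, which the OpenGL specification gives as z_w = ((f - n)/2)·z_d + (n + f)/2, where n and f are the depth range values. A few lines of Python show the default range next to the (-1, 1) range that glDepthRangedNV accepts. This is plain double arithmetic; no float32 rounding is modelled.

```python
def viewport_depth(z_ndc: float, range_near: float, range_far: float) -> float:
    """Default OpenGL depth transform from NDC to window space:
    z_w = (f - n)/2 * z_d + (n + f)/2, with n, f the depth range values."""
    return (range_far - range_near) / 2.0 * z_ndc + (range_near + range_far) / 2.0


# Default glDepthRange(0, 1): the whole [-1, 1] range is squeezed into [0, 1].
print([viewport_depth(z, 0.0, 1.0) for z in (-1.0, 0.0, 1.0)])  # [0.0, 0.5, 1.0]

# A range of (-1, 1) makes the transform the identity,
# so z_ndc is stored unchanged.
print(viewport_depth(0.25, -1.0, 1.0))  # 0.25
```

Because the standard glDepthRange clamps both arguments to [0, 1], the identity case is out of reach without the NV extension.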

It now makes sense why the z-buffer type doesn’t make a difference: the resulting range of possible values can essentially all be represented by every format except a 16-bit buffer.
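A quick way to see why the formats behave alike: if z_ndc lies in (0, 1] (an assumption based on the near-only projection described earlier), the remap puts every stored value in (0.5, 1]. There, float32 has a fixed step of 2**-24. The Python sketch below compares that step with the step of 24-bit and 16-bit normalized integer buffers.

```python
import struct


def f32_spacing(x: float) -> float:
    """Gap between a positive float32 value x and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    nxt = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return nxt - struct.unpack("<f", struct.pack("<f", x))[0]


float_step = f32_spacing(0.5)        # float32 step everywhere in [0.5, 1)
unorm24_step = 1.0 / (2**24 - 1)     # step of a 24-bit normalized integer buffer
unorm16_step = 1.0 / (2**16 - 1)     # step of a 16-bit normalized integer buffer

print(float_step == 2**-24)          # True
print(unorm24_step / float_step)     # just over 1: practically the same resolution
print(unorm16_step / float_step)     # about 256: far coarser
```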

Aha, it was fixed in 4.5. Well, not the DepthRange function, but they added a new function for enabling sanity: gl.ClipControl(gl.LOWER_LEFT, gl.ZERO_TO_ONE). The first parameter can be used to flip the picture upside down; the second one does away with the negative part of depth clip space entirely. With that call added, my code just works (at least on my machine).
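The effect of the second parameter follows from the depth transform in the OpenGL 4.5 specification. With GL_ZERO_TO_ONE it is z_w = (f - n)·z_d + n, instead of ((f - n)/2)·z_d + (n + f)/2. The Python sketch below models only the final float32 rounding of the stored value (real hardware may round at other steps too). It also assumes the z_ndc = near / distance mapping and a near value of 0.1; both are illustrative assumptions, not details of the linked code.

```python
import struct

NEAR = 0.1  # hypothetical near-plane value, not taken from the linked code


def f32(x: float) -> float:
    """Round a Python float (a double) to the nearest IEEE 754 single."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def window_depth(z_ndc: float, zero_to_one: bool,
                 range_near: float = 0.0, range_far: float = 1.0) -> float:
    """Depth transform for either glClipControl depth mode, rounded to float32."""
    if zero_to_one:
        # GL_ZERO_TO_ONE: z_w = (f - n) * z_d + n
        z_w = (range_far - range_near) * z_ndc + range_near
    else:
        # GL_NEGATIVE_ONE_TO_ONE (the default): z_w = (f - n)/2 * z_d + (n + f)/2
        z_w = (range_far - range_near) / 2.0 * z_ndc + (range_near + range_far) / 2.0
    return f32(z_w)


# Two distant points, assuming the z_ndc = near / distance mapping.
z_a = f32(NEAR / 1e7)
z_b = f32(NEAR / 2e7)

print(window_depth(z_a, False) == window_depth(z_b, False))  # True: both become 0.5
print(window_depth(z_a, True) == window_depth(z_b, True))    # False: still distinct
```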

I also noticed that my GPU and drivers (RDNA4) seemingly don’t have any z-buffer modes other than 16-bit integer and 32-bit float; if I request a 24-bit or 32-bit integer buffer, I get a float buffer anyway.